During an upgrade project to Sitecore 9, I got some insights worth sharing. Some findings in this post apply to multiple Sitecore versions and some are specific to Sitecore 9. I’ve been using SolrCloud 6.6, but some of it applies to other versions as well. It became a long, yet very abbreviated, post covering many areas.

In this post:

- Solr Managed schemas in Sitecore 9

- Tune and extend Solr managed schema to fit your needs

- How to fix Sitecore config for correct Solr indexing and stemming

- How to make switching index work with Solr Cloud

- How to reduce index sizes and gain speed using opt-in

- How to make opt-in work with Sitecore (bug workaround)

- Why (myfield == Guid.Empty) won’t give you the result you’re expecting

Working with managed schemas

From Sitecore 9, the “Generate the Solr Schema.xml file” command in the Control Panel is gone. Instead there’s a new “Populate Solr Managed Schema” command, which uses the REST interface of Solr to update the schema file. Solr can share collection configurations. This essentially means that you can have multiple collections (known as cores in standalone Solr) sharing the same configuration, and this is usually the case. Here’s a first finding: this can conflict with the Populate Solr Managed Schema command, as it lists all your indexes and lets you update them all at once. That may result in a race condition, as Sitecore sends multiple commands to update the schema, one per index, and those indexes all share the same schema. To work around this, check only one of the indexes, uncheck all the others and click update. Solr will make sure all the others are the same anyway. If you don’t use a shared Solr configuration, such as one stored in ZooKeeper, you obviously need to run this for all indexes, and in that case you can run them all at once.

![Generate Solr schema.xml]()

Generate Solr Schema.xml in the Control Panel up to Sitecore 8.2

![Populate Solr Managed Schema]()

Populate Solr Managed Schema in the Control Panel of Sitecore 9

![Populate only one index]()

When using shared configuration in Solr, you should populate the schema for only one index. Otherwise they may collide.

So, what will Sitecore do with the Solr managed schema when this process is executed? It’ll add and update the required fields in the schema to make sure Sitecore items can be indexed properly. You’ll probably find that you want to make additional changes to the schema. Previously we could just change the schema.xml file to whatever we wanted. You can still do this the old way by setting the <schemaFactory> class to ClassicIndexSchemaFactory in the solrconfig.xml file in your Solr instances and managing your schema.xml manually. However, once you get the hang of the new way of working with managed schemas, you’ll probably find it better, especially when working with multiple environments and Solr clusters. You’ll find it much easier to just let Sitecore update everything from the Control Panel, instead of playing around with schema.xml files and ensuring they are up to date, distributed and loaded properly into all Solr instances.

So how do we tune the managed schema to what we want? Well, you could just manually call the Solr API, but it would make more sense to have the application specific configuration together with our Sitecore application code. That’ll make a solution less fragmented and easier to keep all environments in sync. Sitecore does this in the <contentSearch.PopulateSolrSchema> pipeline. You can either replace the existing Sitecore.ContentSearch.SolrProvider.Pipelines.PopulateSolrSchema.PopulateFields processor, given that you provide the necessary functionality, or add your own processor. I found it easier to replace the existing one as I found a couple of errors in the built in one that I needed to address anyway. Some of the errors are covered in this post, so I’ll describe the replace scenario here.

You can patch the default processor like this:

CustomPopulateSolrSchema.config

```xml
<?xml version="1.0" encoding="utf-8" ?>
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/" xmlns:search="http://www.sitecore.net/xmlconfig/search/">
  <sitecore search:require="Solr">
    <pipelines>
      <contentSearch.PopulateSolrSchema>
        <processor type="Your.Namespace.PopulateSolrSchemaFields, Your.Assembly"
                   patch:instead="processor[@type='Sitecore.ContentSearch.SolrProvider.Pipelines.PopulateSolrSchema.PopulateFields, Sitecore.ContentSearch.SolrProvider']"/>
      </contentSearch.PopulateSolrSchema>
    </pipelines>
  </sitecore>
</configuration>
```

Then you’ll need to implement the new PopulateSolrSchemaFields class and make an implementation of the ISchemaPopulateHelper interface:

PopulateSolrSchemaFields.cs

```csharp
using Sitecore.Diagnostics; // Assert
using SolrNet.Schema;       // SolrSchema

namespace Your.Namespace
{
    // Replaces the default PopulateFields processor and hands out our own helper.
    public class PopulateSolrSchemaFields : Sitecore.ContentSearch.SolrProvider.Pipelines.PopulateSolrSchema.PopulateFields
    {
        protected override Sitecore.ContentSearch.SolrProvider.Pipelines.PopulateSolrSchema.ISchemaPopulateHelper GetHelper(SolrNet.Schema.SolrSchema schema)
        {
            Assert.ArgumentNotNull(schema, "schema");
            return new YourSchemaPopulateHelper(schema);
        }
    }

    // Produces the schema update commands that Sitecore sends to Solr.
    public class YourSchemaPopulateHelper : Sitecore.ContentSearch.SolrProvider.Pipelines.PopulateSolrSchema.ISchemaPopulateHelper
    {
        private readonly SolrSchema _solrSchema;

        public YourSchemaPopulateHelper(SolrSchema solrSchema)
        {
            Assert.ArgumentNotNull(solrSchema, "solrSchema");
            _solrSchema = solrSchema;
        }

        // Implementation goes here.
        // Look at and copy stub code from Sitecore.ContentSearch.SolrProvider.Pipelines.PopulateSolrSchema
    }
}
```

You can reflect the default implementation Sitecore.ContentSearch.SolrProvider.Pipelines.PopulateSolrSchema.SchemaPopulateHelper in Sitecore.ContentSearch.SolrProvider.dll to get an idea of how it works. You’ll probably find that the reflected code for GetAddFields() and GetReplaceTextGeneralFieldType() isn’t very readable. I refactored this slightly so it became much more readable and manageable without changing the logic. Perhaps the original code is readable too, but was just messed up by the compiler.

Looking a bit deeper into GetAddFields(), I found a couple of errors in Sitecore’s implementation, which Sitecore has now confirmed as bugs. There may be differences between versions and releases of Sitecore, but I’ve found the following mapping errors in many 8.x and in all three 9.x releases. So check these in your configuration:

- "*_t_en" is incorrectly mapped to "text_general". This should be mapped to "text_en".

- "*_t_cz" is incorrectly mapped to "text_cz". This is initially a bug in Solr referring to Czech. The language code for Czech is "cs". So the correct mapping should be "*_t_cs" to "text_cz", given that you leave the name of the default Solr text stemming configuration for Czech as is.

- "*_t_no" is mapped to "text_no". “Norwegian” is typically referred to as “Nynorsk” (nn) or “Bokmål” (nb). To make this work better, two additional mappings are needed here. Unless you have specific/custom stemming rules in Solr, you can map both "*_t_nn" and "*_t_nb" to "text_no".

- An array of DateTime is mapped as "{0}_dtm" in Sitecore.ContentSearch.Solr.DefaultIndexConfiguration.config, but there is no mapping provided for "*_dtm", so a multi-value date field needs to be added.
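The corrections above could be expressed as update commands along these lines. This is only a sketch, not Sitecore’s code: the class and method names are made up, the element layout mirrors the child-element style of the replace-field-type snippet shown later in this post, the indexed/stored values are assumptions, and the date field type is passed in because its name depends on your schema.

```csharp
using System.Collections.Generic;
using System.Xml.Linq;

// Hypothetical helper, not part of Sitecore.
public static class CorrectedDynamicFields
{
    // Dynamic field pattern -> Solr field type, as listed in the post.
    private static readonly Dictionary<string, string> TextMappings = new Dictionary<string, string>
    {
        { "*_t_en", "text_en" },
        { "*_t_cs", "text_cz" },
        { "*_t_nn", "text_no" },
        { "*_t_nb", "text_no" }
    };

    // Builds one add-dynamic-field command. indexed/stored = true is an assumption.
    public static XElement CreateDynamicField(string name, string type, bool multiValued)
    {
        return new XElement("add-dynamic-field",
            new XElement("name", name),
            new XElement("type", type),
            new XElement("indexed", "true"),
            new XElement("stored", "true"),
            new XElement("multiValued", multiValued ? "true" : "false"));
    }

    // dateFieldType: the type your existing "*_dt" mapping uses (schema dependent).
    public static IEnumerable<XElement> GetCorrectedFields(string dateFieldType)
    {
        foreach (KeyValuePair<string, string> mapping in TextMappings)
        {
            yield return CreateDynamicField(mapping.Key, mapping.Value, false);
        }

        // The missing multi-value date mapping.
        yield return CreateDynamicField("*_dtm", dateFieldType, true);
    }
}
```

In a custom ISchemaPopulateHelper you would return these elements together with the other fields, replacing the faulty mappings rather than adding duplicates.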

You’ll also notice that the format for updating managed schemas is different from the schema itself. The class generates XML snippets that are sent as update commands to Solr. A very annoying thing is that incorrect update commands are just silently accepted. You’ll get a “success” message in Sitecore regardless of whether your update snippets are correct and stored in Solr or not. So make sure you read the managed schema file in the Solr UI to verify that the changes have actually been stored.

![Populate Schema Success]()

Sitecore may show a success message even when the managed schema updates are not applied in Solr.
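If you’d rather script that verification than click through the Solr UI, a sketch like the following reads a field type back through the Solr Schema API. The class name, base URL and collection name are placeholders, and the code assumes your Solr instance is reachable without extra authentication.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper for checking what Solr actually stored.
public static class ManagedSchemaCheck
{
    // Returns the JSON definition of a field type as stored in the managed schema.
    public static async Task<string> GetFieldTypeJsonAsync(
        HttpClient client, string solrBaseUrl, string collection, string fieldType)
    {
        string url = solrBaseUrl.TrimEnd('/') + "/" + Uri.EscapeDataString(collection)
            + "/schema/fieldtypes/" + Uri.EscapeDataString(fieldType) + "?wt=json";

        using (HttpResponseMessage response = await client.GetAsync(url))
        {
            string body = await response.Content.ReadAsStringAsync();
            if (!response.IsSuccessStatusCode)
            {
                // Surface the Solr error instead of trusting a "success" elsewhere.
                throw new InvalidOperationException(
                    "Solr returned " + (int)response.StatusCode + " for " + url + ": " + body);
            }
            return body;
        }
    }
}
```

You could then check that the returned JSON for, say, text_sv contains the filter classes you expect, for example by looking for "WordDelimiterGraphFilterFactory" in the string.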

A good example of the differences between the schema.xml format and the XML structure for updating it can be found in the GetReplaceTextGeneralFieldType() method. This is also a method worth replacing. Looking closer at its content, it configures text_general to use the default stop words and synonyms filters. Those contain English text in a default setup. This doesn’t really make much sense. I believe text_general should be used for languages where there is no stemming support in Solr. So I suggest you remove the stop words filter and potentially the synonyms filter.

![Solr Managed Schema]()

Look into the managed schema stored in your Solr instance/cluster to ensure your config changes have been applied properly.

You may also find solr.WordDelimiterGraphFilterFactory very useful for tokenizing things like product names, trademarks, phone numbers, SKU numbers etc. I believe an equivalent filter was configured as default in Solr 5.3, but in 6.6 it’s not. I found myself working through all the Solr analyzers for all the language definitions to get good search results. Time consuming, but well worth it.

Here’s a sample of a Solr schema configuration for a Swedish stemmed text field type, leveraging the WordDelimiterGraphFilterFactory:

```xml
<fieldType name="text_sv" class="solr.TextField" positionIncrementGap="100">
  <analyzer>
    <tokenizer class="solr.StandardTokenizerFactory"/>
    <filter class="solr.StopFilterFactory" format="snowball" words="lang/stopwords_sv.txt" ignoreCase="true"/>
    <filter class="solr.WordDelimiterGraphFilterFactory" catenateNumbers="1" generateNumberParts="1" splitOnCaseChange="1" generateWordParts="1" splitOnNumerics="1" catenateAll="0" catenateWords="1"/>
    <filter class="solr.LowerCaseFilterFactory"/>
    <filter class="solr.SnowballPorterFilterFactory" language="Swedish"/>
  </analyzer>
</fieldType>
```

To achieve the configuration above by letting Sitecore update the managed schema, your implementation of the ISchemaPopulateHelper interface actually needs to produce the XML snippet below, which Sitecore then sends to the Solr API.

```xml
<replace-field-type>
  <name>text_sv</name>
  <class>solr.TextField</class>
  <positionIncrementGap>100</positionIncrementGap>
  <analyzer>
    <tokenizer>
      <class>solr.StandardTokenizerFactory</class>
    </tokenizer>
    <filters>
      <class>solr.StopFilterFactory</class>
      <ignoreCase>true</ignoreCase>
      <words>lang/stopwords_sv.txt</words>
      <format>snowball</format>
    </filters>
    <filters>
      <class>solr.WordDelimiterGraphFilterFactory</class>
      <catenateNumbers>1</catenateNumbers>
      <generateNumberParts>1</generateNumberParts>
      <splitOnCaseChange>1</splitOnCaseChange>
      <generateWordParts>1</generateWordParts>
      <catenateAll>0</catenateAll>
      <catenateWords>1</catenateWords>
      <splitOnNumerics>1</splitOnNumerics>
    </filters>
    <filters>
      <class>solr.LowerCaseFilterFactory</class>
    </filters>
    <filters>
      <class>solr.SnowballPorterFilterFactory</class>
      <language>Swedish</language>
    </filters>
  </analyzer>
</replace-field-type>
```

As I mentioned above, the compiled and reflected code of this isn’t very readable, so I created a small fluent pattern to generate this configuration. I’m happy to share that code, but I assume Sitecore will have a preferred way of working with this in a near future release. @Sitecore: Please reach out to me if you’re interested in incorporating my solution into the product.
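To give an idea of what such a fluent approach might look like, here is a minimal sketch built on LINQ to XML. It is not the code referred to above and not anything shipped by Sitecore; the FieldTypeBuilder class and its method names are invented for illustration, and it only covers the elements used in the text_sv snippet.

```csharp
using System.Collections.Generic;
using System.Xml.Linq;

// Hypothetical fluent builder for a replace-field-type command.
public class FieldTypeBuilder
{
    private readonly XElement _analyzer = new XElement("analyzer");
    private readonly XElement _root;

    public FieldTypeBuilder(string name, string solrClass, int positionIncrementGap)
    {
        _root = new XElement("replace-field-type",
            new XElement("name", name),
            new XElement("class", solrClass),
            new XElement("positionIncrementGap", positionIncrementGap),
            _analyzer);
    }

    public FieldTypeBuilder Tokenizer(string solrClass)
    {
        _analyzer.Add(new XElement("tokenizer", new XElement("class", solrClass)));
        return this;
    }

    // Each filter becomes a <filters> element with its options as child elements.
    public FieldTypeBuilder Filter(string solrClass, IDictionary<string, string> options = null)
    {
        XElement filter = new XElement("filters", new XElement("class", solrClass));
        if (options != null)
        {
            foreach (KeyValuePair<string, string> option in options)
            {
                filter.Add(new XElement(option.Key, option.Value));
            }
        }
        _analyzer.Add(filter);
        return this;
    }

    // Returns a copy so later builder calls cannot alter an already built command.
    public XElement Build()
    {
        return new XElement(_root);
    }
}

public static class FieldTypeBuilderExample
{
    public static XElement SwedishText()
    {
        return new FieldTypeBuilder("text_sv", "solr.TextField", 100)
            .Tokenizer("solr.StandardTokenizerFactory")
            .Filter("solr.StopFilterFactory", new Dictionary<string, string>
            {
                { "ignoreCase", "true" }, { "words", "lang/stopwords_sv.txt" }, { "format", "snowball" }
            })
            .Filter("solr.LowerCaseFilterFactory")
            .Filter("solr.SnowballPorterFilterFactory", new Dictionary<string, string> { { "language", "Swedish" } })
            .Build();
    }
}
```

The example leaves out the WordDelimiterGraphFilterFactory options for brevity; they would be added as one more Filter call between the stop filter and the lower case filter.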

SolrCloud

Solr includes the ability to set up a cluster of Solr servers that combines fault tolerance and high availability. It’s now called SolrCloud, and has the capabilities to provide distributed indexing and search, with central configuration, automatic load balancing and fail-over. SolrCloud is not a cloud service. It’s just a version of Solr. You can run the same SolrCloud application and index configuration on a single instance on your local dev machine, as well as on your large scale, sharded and clustered, production instances. Worth noticing is that Solr “cores” are called “collections” in SolrCloud. A “core” in SolrCloud is just a shard or replica of a collection.

To make Sitecore work well with Solr, you should use switching indexes. Otherwise your indexes will be blank during rebuilds! To make this work with SolrCloud, you’ll need to use the Sitecore.ContentSearch.SolrProvider.SwitchOnRebuildSolrCloudSearchIndex index type. The idea is that you have two Solr collections for every index together with two aliases. The main alias points to one of the indexes and a rebuild alias points to the other index. So after index rebuild, Sitecore will swap the two aliases. This means a fully functional index is always available even when rebuilding the index.

The constructor of SwitchOnRebuildSolrCloudSearchIndex uses a few more arguments to make this work. When this is defined in the config files, the constructor arguments are just listed as a <param> list. This makes it a bit tricky to patch properly, since it’s somewhat tricky to ensure the order of the <param> list from Sitecore patch files. What’s worse is that if you get those arguments wrong, Sitecore just won’t start. You can’t even use the /sitecore/admin/ShowConfig.aspx page. Doh!

Sitecore 9 also introduced a new bug regarding switching indexes. Currently you must set the ContentSearch.Solr.EnforceAliasCreation setting to true. Otherwise, Sitecore won’t update the Solr index aliases properly after a rebuild. But make sure not to do this on your Content Delivery servers. Otherwise you’ll have multiple servers switching the aliases, ending up in undefined states.

Another common issue with Solr is that commits of large updates take longer than the default timeout, causing exceptions on the Sitecore client side. We can simply increase the timeout by changing the ContentSearch.Solr.ConnectionTimeout setting. Note that the timeout is specified in milliseconds, so 600000 will give a 10 minute timeout. The final commit after a full index rebuild can easily take a few minutes.

Recently I became aware of another setting, ContentSearch.SearchMaxResults, that you really should set. This setting tells Sitecore how many rows should be returned from Solr, unless you specify a .Take(n) Linq expression in your queries. By default, the value of this setting is empty, causing Sitecore to ask for int.MaxValue, i.e. 2^31-1 = 2,147,483,647, rows. This is not good practice, as the resulting rows will be read into memory. You should set this to a more reasonable value, such as 1000 or similar.

If you’re using SolrCloud 6.6 in a clustered and sharded environment, you might also run into the following strange behavior unless you reduce SearchMaxResults. It turns out that Solr is a bit buggy when rows gets close to int.MaxValue. When a call to Solr has the rows parameter set to int.MaxValue (2^31-1), I got a strange NegativeArraySizeException from Solr. If I send int.MaxValue+1 (2^31), I get a more sensible NumberFormatException from Solr, and that’s completely fine. If I send int.MaxValue-1 (2^31-2), I get an IllegalArgumentException with the message “maxSize must be <= 2147483630” (2^31-18). If I send that specified max number, I get a Java heap OutOfMemoryError… Running SolrCloud on a single server instance, none of this is reproducible. Very strange… and you may not get much valuable data about this on the Sitecore side… So just set ContentSearch.SearchMaxResults and you’re good to go.
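For reference, the numbers in the last few paragraphs can be checked with a few lines of plain C#. Nothing here touches Sitecore or Solr; it only spells out the arithmetic behind the row limits and the 600000 ms timeout.

```csharp
using System;

public static class RowLimitArithmetic
{
    public static void Main()
    {
        long twoPow31 = 1L << 31; // 2147483648

        // int.MaxValue is what Sitecore asks for when SearchMaxResults is empty.
        Console.WriteLine(int.MaxValue == twoPow31 - 1); // True

        // The limit quoted in Solr's IllegalArgumentException message.
        Console.WriteLine(twoPow31 - 18); // 2147483630

        // ContentSearch.Solr.ConnectionTimeout is given in milliseconds.
        Console.WriteLine(TimeSpan.FromMilliseconds(600000).TotalMinutes); // 10
    }
}
```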

If you, like me, prefer reading code, the above can be summarized in a config template like this:

```xml
<?xml version="1.0" encoding="utf-8" ?>
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/" xmlns:set="http://www.sitecore.net/xmlconfig/set/"
               xmlns:role="http://www.sitecore.net/xmlconfig/role/" xmlns:search="http://www.sitecore.net/xmlconfig/search/">
  <sitecore search:require="Solr">
    <sc.variable name="solrIndexPrefix" value="my_sc9_prefix" />
    <settings>
      <setting name="ContentSearch.Solr.ConnectionTimeout" set:value="600000" />
      <setting name="ContentSearch.Solr.EnforceAliasCreation" set:value="true" role:require="ContentManagement or Standalone" />
      <setting name="ContentSearch.SearchMaxResults" set:value="1000" />
    </settings>
    <contentSearch>
      <configuration>
        <indexes>
          <index id="sitecore_master_index" type="Sitecore.ContentSearch.SolrProvider.SwitchOnRebuildSolrCloudSearchIndex, Sitecore.ContentSearch.SolrProvider" solrPrefix="$(solrIndexPrefix)"
                 role:require="ContentManagement or Standalone">
            <param desc="name">$(id)</param>
            <param desc="mainalias">$(solrPrefix)_$(id)</param>
            <param desc="rebuildalias">$(solrPrefix)_$(id)_Rebuild</param>
            <param desc="collection">$(solrPrefix)_$(id)_1</param>
            <param desc="rebuildcollection">$(solrPrefix)_$(id)_2</param>
            <param ref="contentSearch/solrOperationsFactory" desc="solrOperationsFactory" />
            <param ref="contentSearch/indexConfigurations/databasePropertyStore" desc="propertyStore" param1="$(id)" />
            ...
            ...
          </index>
        </indexes>
      </configuration>
    </contentSearch>
  </sitecore>
</configuration>
```

Solr Opt-in

By default, Sitecore indexes all fields. This means that Sitecore will send the content of all fields to Solr for indexing, except the ones that you explicitly exclude from indexing (opt-out). This may be ok for small solutions, but for large solutions, this will cause indexes to become very large and indexing to be CPU heavy and time consuming.

If you don’t make queries on a field or use the stored result, you don’t need it in the index. So why index it then? It’s just a waste of resources. Note that free text search isn’t affected by this, as this is typically made on computed fields, such as _content or a more suitable computed field you make yourself.

The <indexAllFields> element controls this in the <indexConfiguration> section. When setting it to false, Sitecore will only index the fields you explicitly include for indexing (Opt-in). I’d recommend you set this to false when starting new projects, since changing this to false later on could potentially require code refactoring and a lot of testing.

Sitecore has confirmed a bug in AbstractDocumentBuilder<T> when using opt-in. There is an if-statement in the AddItemFields() method, that only loads all field values when using opt-out. So when using opt-in, fields with null values won’t be properly indexed. This means that values coming from standard values, clones or language fallback won’t be indexed. This seems to apply to most versions of Sitecore, but luckily it’s a very simple patch:

SolrDocumentBuilder.cs

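```csharp
using Sitecore.ContentSearch; // IIndexable, IProviderUpdateContext

public class SolrDocumentBuilder : Sitecore.ContentSearch.SolrProvider.SolrDocumentBuilder
{
    public SolrDocumentBuilder(IIndexable indexable, IProviderUpdateContext context)
        : base(indexable, context)
    {
    }

    protected override void AddItemFields()
    {
        // Load all field values up front, so that values coming from standard values,
        // clones or language fallback are available when using opt-in.
        Indexable.LoadAllFields();
        base.AddItemFields();
    }
}
```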

SolrDocumentBuilder.patch.config

```xml
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/" xmlns:set="http://www.sitecore.net/xmlconfig/set/" xmlns:role="http://www.sitecore.net/xmlconfig/role/" xmlns:search="http://www.sitecore.net/xmlconfig/search/">
  <sitecore role:require="Standalone OR ContentManagement OR ContentDelivery OR Processing OR Reporting" search:require="Solr">
    <contentSearch>
      <indexConfigurations>
        <defaultSolrIndexConfiguration type="Sitecore.ContentSearch.SolrProvider.SolrIndexConfiguration, Sitecore.ContentSearch.SolrProvider">
          <documentBuilderType>Your.Namespace.SolrDocumentBuilder, Your.Assembly</documentBuilderType>
        </defaultSolrIndexConfiguration>
      </indexConfigurations>
    </contentSearch>
  </sitecore>
</configuration>
```

Going for an opt-in solution does give you some more work, so is there a real gain from the opt-in approach? I recently converted one of our existing solutions from the opt-out to the opt-in principle. The size of the Solr indexes was reduced from almost 70GB to 3.5GB. Index time was reduced from 4 hours to 30 minutes on a 16 core/64GB RAM server. I think those figures speak for themselves, and your queries will be faster too.

Tricky ContentSearch Linq to Solr bug

Recently I noticed a Sitecore ContentSearch query that didn’t return the result I was expecting. It took a while to get a hold of what was really going on. I can’t fully disclose what kind of queries I’m performing in that project, so I’ve translated this into a more generic scenario that I also hope makes it easier to understand the issue.

Let’s say you have a site with a lot of pages and you want to generate a sitemap.xml page. You can do this by querying all your indexable pages that have a layout and so on, and just make an XML output of it. Now, let’s say you have some deprecated pages, duplicate content or whatever that you don’t want to be indexed. A way of solving that could be to have a canonical link to an alternative page containing the equivalent content. That can simply be stored in a DropLink Sitecore field, and we render it as a <link rel="canonical" /> HTML tag.

Obviously we don’t want to include those pages having a canonical link pointing to an alternative page in the sitemap xml. So we simply construct our Sitecore ContentSearch query like this, right?

```csharp
var pages = searchContext.GetQueryable<SitemapDocument>()
    .Filter(f => f.IsLatestVersion && // More filters here obviously to get only pages with layouts etc...
                 f.CanonicalLink == Guid.Empty)
    .GetResults()
    .Select(r => r.Document)
    ...
    ...

public class SitemapDocument
{
    [IndexField("_latestversion")]
    public bool IsLatestVersion { get; set; }

    [IndexField("canonicallink")]
    public Guid CanonicalLink { get; set; }
    ...
    ...
}
```

Well, it turns out that the above doesn’t work! Why?

Well, Guid isn’t a nullable type, so Guid.Empty is actually {00000000-0000-0000-0000-000000000000} and is indexed as 00000000000000000000000000000000. I’ll abbreviate this as 000… from now on. So, when Sitecore indexes a blank Guid field, it’ll index it as 000… When searching a field == Guid.Empty, like in the sample code above, Sitecore will make a Solr query looking like this:

?q=*:*&fq=(_latestversion:(True) AND canonicallink_s:(000....)...

This means that if an item doesn’t have the canonical link field at all, nothing is indexed for it, so the item doesn’t match 000… and won’t be returned.

I’ve tried all sorts of ways to solve this. I’ve tried making the property a nullable Guid, making it a string field and so on. So have Sitecore support and this is now a confirmed bug (though I’m not sure the root cause was properly provided to product department).

I think that an empty guid should never be stored as 000… in the index. If there is no guid, there should be no data in the index either, i.e. null. Likewise, if 000…. is actually stored in a guid field it should also be indexed as null. At query time, I think a field == Guid.Empty statement should be serialized to -field_s:*. This would make the query behavior consistent and somewhat reduce the index size as well.

The best way I’ve found to work around this for now is to exclude the canonical field from the index entirely (or not include it, if you’re following my recommendations above) and replace it with a computed field that’ll always return the link Guid, or Guid.Empty, regardless of whether the field is empty or not present on the item being indexed. Never return null. That way the query will always return correct data.
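The core of such a computed field is a “never null” normalization, which could look something like the sketch below. The CanonicalLinkValue class is made up for illustration and is deliberately free of Sitecore types; the assumption is that a computed index field (an IComputedIndexField implementation) would read the raw DropLink value from the item, pass it through this method and return the result from ComputeFieldValue.

```csharp
using System;

// Hypothetical helper; the wiring into a computed index field is left out.
public static class CanonicalLinkValue
{
    // rawFieldValue: the raw DropLink value, e.g. "{GUID}", an empty string,
    // or null when the field doesn't exist on the item's template.
    public static Guid Normalize(string rawFieldValue)
    {
        Guid parsed;
        // TryParse returns false for null, empty or malformed input,
        // in which case we fall back to Guid.Empty instead of null.
        return Guid.TryParse(rawFieldValue, out parsed) ? parsed : Guid.Empty;
    }
}
```

With this in place, every indexed document gets a value for the field, so a comparison against Guid.Empty has something to match on.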

Note that the inverted query works just fine. A query like field != Guid.Empty does give the expected result.

Closing

Okay, this was a very long first post of my 6th year as a Sitecore MVP. Hope you find it valuable and please share your thoughts on this. I’d love to hear your experiences in this area. Have you all run into these issues too, or is it just me pushing some boundaries again?

Btw, have you noticed the Index Manager dialog result message, “Finished in X seconds”? The value isn’t accurate when building multiple indexes. Do you see what’s wrong? It’s a confirmed bug too, but I doubt it’ll ever be corrected, and I’m perfectly fine with that. It’s a cosmetic bug well below minor level.

Regarding organising content, one has to decide if one shared tree is used for all languages or if they could diverge. We typically create one tree per market, where some markets may use more than one language, such as Switzerland, Canada and so on, having two or more languages. On such markets, the client typically wants the same content and structure just translated into two or more languages, compared to other markets.

Regarding organising content, one has to decide if one shared tree is used for all languages or if they could diverge. We typically create one tree per market, where some markets may use more than one language, such as Switzerland, Canada and so on, having two or more languages. On such markets, the client typically wants the same content and structure just translated into two or more languages, compared to other markets.

Sitecore have awarded

Sitecore have awarded

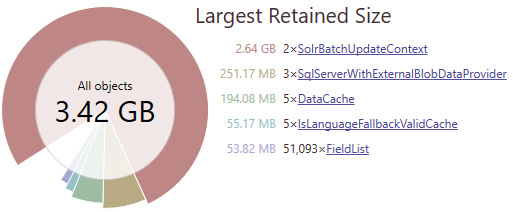

By default, Sitecore stores media files as blobs in the database. This is usually good, but if you have a large volume of files, this can become too heavy to handle. So I wrote a Sitecore plugin where you can seamlessly store all the binaries in the cloud.

By default, Sitecore stores media files as blobs in the database. This is usually good, but if you have a large volume of files, this can become too heavy to handle. So I wrote a Sitecore plugin where you can seamlessly store all the binaries in the cloud.